Kubernetes Fundamentals for Beginners: Architecture, Key Components & How It Works

Understanding Kubernetes Architecture & Components

What Is Kubernetes?

Kubernetes, commonly known as K8s, is a powerful open-source container orchestration platform originally developed by Google. It helps manage, scale, and automate containerized application deployment across various environments, including on-premises, cloud, and virtual machines.

As the industry shifts toward containerized applications, managing those containers at scale becomes difficult. That's where Kubernetes (K8s) fits in.

Problems Before Kubernetes

Manual Container Management – Running multiple containers across different environments required extensive manual effort.

Scalability Issues – Handling increasing workloads meant manually adding or removing containers.

Resource Optimization – Containers often consumed more resources than needed, leading to inefficiencies.

Service Discovery & Load Balancing – Applications needed a way to find each other and distribute traffic efficiently.

Fault Tolerance & Self-Healing – If a container failed, it had to be manually restarted.

How Kubernetes Solves These Problems

✅ Automated Deployment & Scaling – Kubernetes automatically scales applications up or down based on demand.

✅ Self-Healing – If a container crashes, Kubernetes restarts it automatically.

✅ Load Balancing – Distributes traffic across multiple containers to ensure high availability.

✅ Efficient Resource Utilization – Optimizes CPU and memory usage by scheduling containers smartly.

✅ Multi-Cloud & Hybrid – Runs on-premises, in the cloud, or across hybrid combinations seamlessly.
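Automatic scaling is usually handled by the Horizontal Pod Autoscaler (HPA). Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below implements only that rule, plus the default 10% tolerance and min/max clamping. It is an illustration, not the real controller, which also applies stabilization windows and other safeguards. The function name and its parameters are hypothetical.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the HPA scaling rule (hypothetical helper, not Kubernetes code)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (minReplicas / maxReplicas in an HPA spec).
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6 pods.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

The clamping step is why an HPA never scales past maxReplicas, however high the load climbs.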

Top Container Orchestrators Competing with K8s

While Kubernetes is incredibly powerful and widely adopted, it's not the only container orchestrator in the game! Surprising, right? Several alternatives compete with Kubernetes, each offering unique advantages.

Here are some notable ones:

Docker Swarm – A simple and native clustering solution for Docker containers.

Docker Swarm is easier to set up, but it is less suited to large-scale deployments because it lacks many of Kubernetes' advanced features and its extensive ecosystem.

Marathon – A Mesos-based orchestrator that can handle large-scale applications.

Amazon ECS – A fully managed container orchestration service by AWS.

Azure Service Fabric – Microsoft's platform for building and running microservice architectures.

HashiCorp Nomad – A lightweight orchestrator for both containerized and non-containerized workloads.

Kubernetes Deployment Options: Local, Cloud and Enterprise Solutions Explained

There are different "flavors" of Kubernetes, depending on how and where you want to run it. Let's explore the options!

1️⃣ Local Kubernetes (For Development & Testing)

Run Kubernetes on your machine for local development and testing with the following options:

KIND (Kubernetes in Docker)

Docker Desktop (includes Kubernetes)

Minikube

2️⃣ Cloud-Managed Kubernetes (For Production-Ready Deployments)

Major cloud providers offer managed Kubernetes services, which simplify cluster setup:

Google Kubernetes Engine (GKE)

Amazon Elastic Kubernetes Service (EKS)

Azure Kubernetes Service (AKS)

Oracle Container Engine for Kubernetes (OKE)

These services handle the infrastructure, scaling, and updates, letting you focus on your applications.

3️⃣ Enterprise Kubernetes (For Large-Scale Businesses)

For organizations that need advanced security, governance, and UI-based management:

Red Hat OpenShift

VMware Tanzu

Rancher

Mirantis Kubernetes Engine (MKE)

These platforms add enterprise features, easier management, and built-in integrations.

4️⃣ DIY Kubernetes (For the Brave!)

For those who want full control over the infrastructure of Kubernetes:

🔹 kubeadm – Official Kubernetes tool for manually setting up clusters on bare-metal servers or VMs.

🔹 K3s – A lightweight Kubernetes distribution for edge computing or small deployments.

🔹 Self-hosted Kubernetes – Build and manage a cluster from scratch, offering maximum flexibility and customization.

Building Kubernetes from scratch on bare-metal servers or virtual machines also teaches you how every component fits together, but it requires significant expertise.

Kubernetes Components

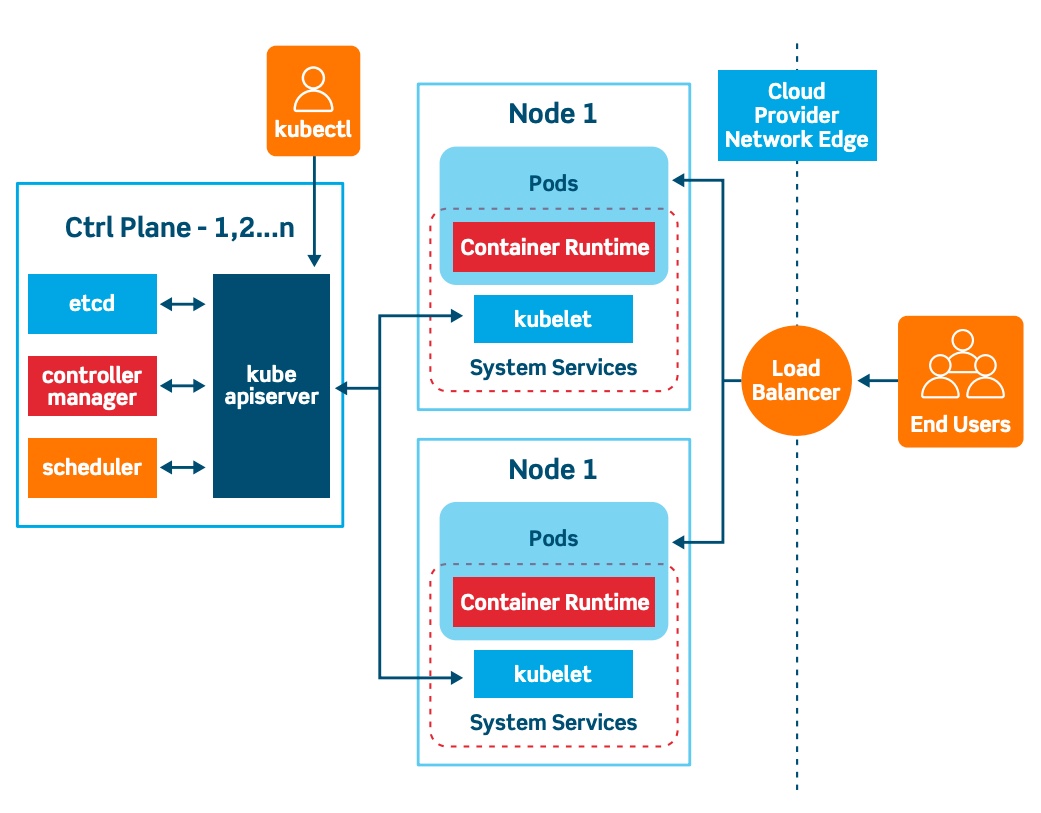

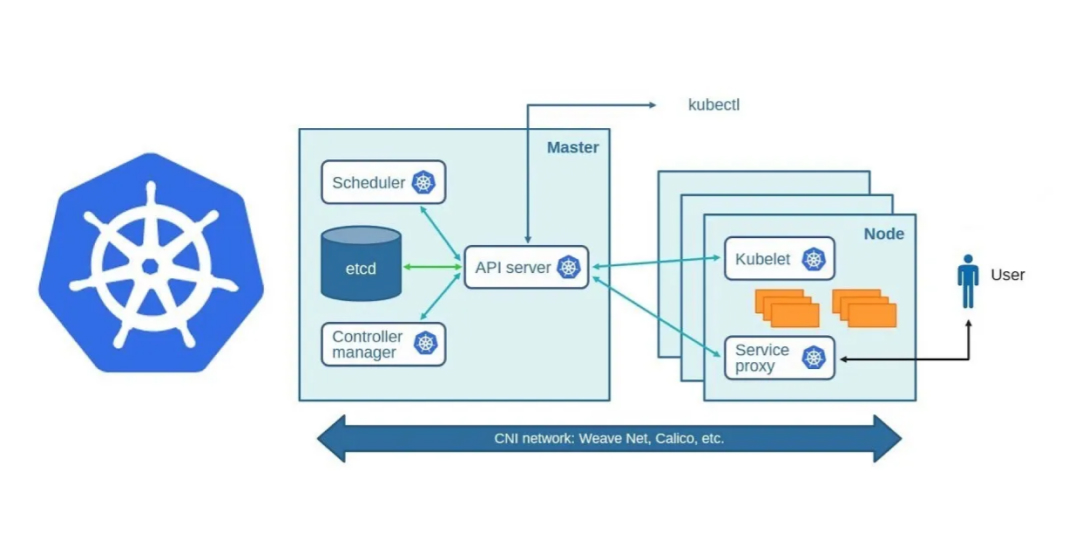

A Kubernetes cluster consists of nodes, which can be physical or virtual machines. It has:

Master (Control Plane) – Manages the cluster, schedules workloads, and maintains the desired state.

Worker Nodes – Run application containers. For production, run multiple worker nodes and, ideally, multiple control plane nodes (commonly three, so etcd can keep a quorum) to avoid a single point of failure.

Here’s a quick overview of the master node components:

ETCD

Controller Manager

Scheduler

API Server

ETCD

etcd is a highly available distributed key-value store. It acts as the source of truth for all Kubernetes configurations and cluster states and stores metadata needed by the cluster for proper operation.

Think of etcd as Kubernetes' memory: it remembers everything about the cluster, including node statuses, pod placements, service discovery data, and configuration changes.

How Does etcd Work in Kubernetes?

Whenever you make a change to Kubernetes, whether deploying an application, scaling replicas, or updating configurations, this information is:

Sent through the Kubernetes API Server (via kubectl, YAML files, or REST API calls).

Stored in etcd as a key-value pair (most built-in objects are serialized as Protocol Buffers rather than JSON).

Versioned, meaning each write gets a new revision number, so changes can be tracked over time.

Watched by Kubernetes components (through the API Server), which automatically react to any changes.
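To make these ideas concrete, here is a small in-memory Python model of a revisioned key-value store with prefix watches. ToyEtcd is hypothetical and is not a real etcd client. It assumes plain string values. The /registry/... key layout mirrors how Kubernetes names its keys in etcd, whereas real values are serialized API objects.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

WatchCallback = Callable[[str, str, int], None]


class ToyEtcd:
    """Toy model of etcd ideas: a global revision counter, per-key history, prefix watches."""

    def __init__(self) -> None:
        self.revision = 0
        self.history: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
        self.watchers: List[Tuple[str, WatchCallback]] = []

    def put(self, key: str, value: str) -> int:
        # Every write bumps the store-wide revision number.
        self.revision += 1
        self.history[key].append((self.revision, value))
        # Notify every watcher whose prefix matches the written key.
        for prefix, callback in self.watchers:
            if key.startswith(prefix):
                callback(key, value, self.revision)
        return self.revision

    def get(self, key: str) -> str:
        # The latest revision of the key wins.
        return self.history[key][-1][1]

    def watch(self, prefix: str, callback: WatchCallback) -> None:
        self.watchers.append((prefix, callback))


store = ToyEtcd()
events: List[Tuple[int, str, str]] = []
store.watch("/registry/deployments/", lambda key, value, rev: events.append((rev, key, value)))
store.put("/registry/deployments/default/web", "replicas=2")
store.put("/registry/deployments/default/web", "replicas=3")
store.put("/registry/pods/default/web-abc", "phase=Running")  # prefix does not match: no event
print(store.get("/registry/deployments/default/web"))  # replicas=3
print(len(events))  # 2
```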

Key Features of etcd

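Distributed & Highly Available – etcd typically runs across multiple nodes and uses the Raft consensus algorithm to keep them in agreement, so there is no single point of failure.

Versioning – Every change creates a new revision. Older revisions are kept until they are compacted, which lets clients see what changed and when.

Real-time Watch Mechanism – Clients can watch keys and are notified of changes as they happen. The API Server relies on this to keep Kubernetes components up to date.

Persistence & Backup – Data is persisted to disk, and regular etcd snapshots make cluster recovery possible after a failure.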

Why is etcd Critical in Kubernetes?

Without etcd, Kubernetes wouldn’t function, as it wouldn’t know what’s running in the cluster.

A corrupted etcd database means loss of cluster state, making disaster recovery crucial.

In production, etcd is typically replicated across multiple nodes to prevent data loss.

API SERVER

The API Server (kube-apiserver) is the entry point for all communication with Kubernetes. It exposes the Kubernetes REST API, which users, applications, and kubectl use to access the cluster.

What Does the API Server Do?

✅ Processes Requests – Handles all API requests from users (kubectl), controllers, and external systems.

✅ Validates & Authenticates – Verifies that every incoming request comes from a known identity, is authorized, and is properly formatted.

✅ Communicates with etcd – Saves and fetches cluster data in etcd. It is the only control plane component that talks to etcd directly.

✅ Triggers Cluster Events – Notifies the controllers and the Scheduler of cluster changes through watches.

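How It Works

When a user runs kubectl apply -f deployment.yaml:

1. The API Server receives the request.

2. It authenticates the user, checks authorization, and validates the object.

3. It stores the object in etcd.

4. The Controller Manager and Scheduler are notified of the change.

5. Controllers create the required Pods, and the Scheduler assigns each one to a worker node.

6. The kubelet on that node starts the Pod.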
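The request path above can be sketched as a toy Python handler. It authenticates, authorizes, and validates the request, and only then persists the object. The tokens, the permission set, and the ToyAPIServer class are hypothetical. The real API server also runs admission controllers and far richer schema validation. It does, however, answer with the same HTTP status codes (401, 403, 422, 201) in these cases.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

# Hypothetical bearer tokens and permissions, for illustration only.
TOKENS: Dict[str, str] = {"token-alice": "alice"}
ALLOWED: Set[Tuple[str, str, str]] = {("alice", "create", "deployments")}


@dataclass
class ToyAPIServer:
    """Toy request path: authenticate -> authorize -> validate -> persist."""
    storage: Dict[str, dict] = field(default_factory=dict)  # stands in for etcd

    def handle(self, token: str, verb: str, resource: str, obj: dict) -> Tuple[int, str]:
        user = TOKENS.get(token)
        if user is None:
            return 401, "Unauthorized"   # authentication failed: unknown identity
        if (user, verb, resource) not in ALLOWED:
            return 403, "Forbidden"      # authorization failed: no permission
        name = obj.get("metadata", {}).get("name")
        replicas = obj.get("spec", {}).get("replicas", 1)
        if not name or not isinstance(replicas, int) or replicas < 0:
            return 422, "Invalid"        # validation failed: malformed object
        # Namespace fixed to "default" for brevity.
        key = f"/registry/{resource}/default/{name}"
        self.storage[key] = obj          # only now is the object persisted
        return 201, key                  # 201 Created


api = ToyAPIServer()
deployment = {"metadata": {"name": "web"}, "spec": {"replicas": 3}}
print(api.handle("token-alice", "create", "deployments", deployment))
# (201, '/registry/deployments/default/web')
print(api.handle("bad-token", "create", "deployments", deployment))
# (401, 'Unauthorized')
```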

Controller Manager: The Brain of Kubernetes Orchestration

The Controller Manager drives the actual cluster state toward the desired state by running multiple controllers. Each controller handles a specific job, continuously monitoring the cluster and taking action when something drifts from what you declared.

What does the Controller Manager do?

✅ Watches for cluster state – It continuously checks to see if everything runs as expected.

✅ Triggers Corrections – Takes corrective action when something goes wrong, such as a pod crashing.

✅ Manages Multiple Controllers – Each controller is responsible for a different task. Examples include:

✅ Node Controller – Detects and responds when nodes go down.

✅ Replication Controller – Ensures the specified number of pod replicas is running (modern workloads use ReplicaSets, handled by the ReplicaSet controller).

✅ Service Account Controller – Creates default service accounts for new namespaces.

How It Works

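1. A pod crashes or disappears unexpectedly (for example, because its node failed).

2. The relevant controller (such as the ReplicaSet controller) detects that fewer pods are running than desired.

3. It creates a replacement pod through the API Server, which records it in etcd.

4. The Scheduler places the new pod on a healthy node.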
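Every controller is built around a reconciliation loop: compare the desired state with the actual state, then act on the difference. The minimal Python sketch below models one pass of a ReplicaSet-style loop. The reconcile function and the pod names are hypothetical. A real controller would send create and delete requests to the API Server instead of editing a list.

```python
import itertools
from typing import List

_ids = itertools.count(1)  # source of unique suffixes for replacement pods


def reconcile(desired_replicas: int, running_pods: List[str]) -> List[str]:
    """One pass of a toy ReplicaSet-style control loop (hypothetical helper)."""
    pods = list(running_pods)
    diff = desired_replicas - len(pods)
    if diff > 0:
        # Too few pods (e.g. one crashed or its node died): create replacements.
        pods.extend(f"web-{next(_ids)}" for _ in range(diff))
    elif diff < 0:
        # Too many pods: delete the surplus.
        pods = pods[:desired_replicas]
    return pods


pods = ["web-a", "web-b", "web-c"]
pods.remove("web-b")        # simulate a pod that crashed and was removed
pods = reconcile(3, pods)
print(pods)                 # ['web-a', 'web-c', 'web-1']
print(reconcile(3, pods) == pods)  # True: nothing to do once the states match
```

The function only looks at the current difference, so running it again on an already-correct list changes nothing. This "level-triggered" design is what lets controllers recover safely from missed events.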

Scheduler: The Decision-Maker of Kubernetes

The Scheduler determines where new pods should run. It ensures workloads are spread across nodes with available resources.

What Does the Scheduler Do?

✅ Assigns pods to nodes – Determines the best node for each pod based on resource availability.

✅ Considers constraints – Evaluates CPU, memory, affinity rules, and taints/tolerations.

✅ Ensures workload balance – Prevents resource overloading on a single node.

How It Works

1. A new pod is created but remains Pending.

2. The Scheduler filters out nodes that cannot run the pod, then scores the remaining nodes based on available resources and constraints.

3. It assigns (binds) the pod to the highest-scoring node and records the decision through the API Server.

4. The kubelet on the chosen node starts running the pod.
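The Scheduler makes its decision in two phases. First it filters out nodes that cannot run the pod, then it scores the rest. The Python sketch below shows that shape with a single simplified scoring rule: prefer the node with the most free capacity left over. The Node and Pod classes are hypothetical, and taints are reduced to plain strings. The real scheduler combines many plugins and weights.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Node:
    name: str
    free_cpu_m: int      # free CPU in millicores
    free_mem_mi: int     # free memory in MiB
    taints: Set[str]     # simplified: real taints are key=value:effect


@dataclass
class Pod:
    name: str
    cpu_m: int
    mem_mi: int
    tolerations: Set[str]


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    # Filter: drop nodes lacking resources or carrying a taint the pod does not tolerate.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m
        and n.free_mem_mi >= pod.mem_mi
        and n.taints <= pod.tolerations
    ]
    if not feasible:
        return None  # no node fits: the pod stays Pending

    # Score: a simple "least allocated" heuristic favoring leftover capacity.
    def score(n: Node) -> float:
        return (n.free_cpu_m - pod.cpu_m) / 1000 + (n.free_mem_mi - pod.mem_mi) / 1024

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 500, 1024, set()),
    Node("node-b", 2000, 4096, set()),
    Node("node-c", 4000, 8192, {"gpu"}),
]
pod = Pod("web", cpu_m=250, mem_mi=256, tolerations=set())
print(schedule(pod, nodes))  # node-b (node-c is tainted; node-b has more room than node-a)
```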

Worker Nodes

Of course, the master node does a lot, but like a manager, it's nothing without the workers.

Kubelet: The Node's Caretaker

The kubelet is a small but essential agent running on every worker node. It acts as the bridge between the control plane and the containers running in pods.

What Does the Kubelet Do?

✅ Ensures Containers are Running – If a pod is scheduled to a node, the kubelet ensures its containers are running.

✅ Communicates with the control plane – Listens to instructions from the API server and acts accordingly.

✅ Manages the lifecycle of containers – Pulls images, starts and stops containers, and tracks their health.

✅ Works with a container runtime – It uses a CRI-compatible runtime such as containerd or CRI-O to run containers (Docker Engine requires the cri-dockerd adapter since Kubernetes 1.24).

How Kubelet Works

1. The scheduler assigns a pod to a worker node.

2. The kubelet, which watches the API Server, sees that a pod has been assigned to its node.

3. It works with the container runtime to pull images and run containers.

4. It continuously monitors pod health and reports status back to the control plane.
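When a container keeps crashing, the kubelet does not restart it immediately every time. Instead it waits an exponentially growing delay, which appears as the CrashLoopBackOff status. The Kubernetes Pod lifecycle documentation describes the default delay: it starts at 10 seconds, doubles after each restart, and is capped at five minutes. It resets once the container has run cleanly for 10 minutes. Below is a small Python sketch of that schedule; the function name is hypothetical.

```python
from typing import List


def crash_loop_delays(restarts: int, base: int = 10, cap: int = 300) -> List[int]:
    """Seconds a kubelet-style back-off waits before each restart (toy model)."""
    delays = []
    delay = base
    for _ in range(restarts):
        delays.append(delay)
        # Double the delay after every failed restart, never exceeding the cap.
        delay = min(delay * 2, cap)
    return delays


print(crash_loop_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```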

Kube Proxy: The Network Manager of Kubernetes

The kube-proxy is an essential part of a worker node. It takes care of the networking and makes sure that services and pods communicate smoothly with each other.

What Does Kube Proxy Do?

✅ Manages Network Rules – Uses iptables or IPVS to route traffic within the cluster.

✅ Handles Pod-to-Service Communication – Ensures that traffic sent to a Service reaches its backing pods, even across different nodes (basic pod-to-pod connectivity comes from the CNI plugin).

✅ Implements Service Discovery & Load Balancing – Routes traffic to the appropriate pod when a service is accessed.

✅ Supports External Access – Allows services inside the cluster to be exposed externally when needed.

How Kube Proxy Works

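1️⃣ kube-proxy watches the API Server for Services and the pods (endpoints) backing them.

2️⃣ It programs iptables or IPVS rules on the node that map each Service's virtual IP to those pod IPs.

3️⃣ When a pod sends traffic to a Service, the kernel uses these rules to forward it to one of the backing pods.

4️⃣ If a pod dies, its endpoint is removed, and kube-proxy updates the rules so traffic goes only to healthy pods.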
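The Python sketch below models the effect of the rules kube-proxy installs: a lookup from a Service's virtual IP to its current pod IPs. ToyKubeProxy and all IP addresses are hypothetical. The random choice mimics iptables mode; IPVS mode defaults to round-robin.

```python
import random
from typing import Dict, List


class ToyKubeProxy:
    """Toy model: a dict stands in for the iptables/IPVS rules kube-proxy programs."""

    def __init__(self) -> None:
        self.rules: Dict[str, List[str]] = {}

    def sync(self, service_ip: str, endpoints: List[str]) -> None:
        # Called whenever a Service's endpoints change (pods added or removed).
        self.rules[service_ip] = list(endpoints)

    def route(self, service_ip: str, rng: random.Random) -> str:
        backends = self.rules.get(service_ip)
        if not backends:
            raise ConnectionRefusedError(f"no endpoints for {service_ip}")
        # iptables mode picks a backend at random.
        return rng.choice(backends)


proxy = ToyKubeProxy()
proxy.sync("10.96.0.20", ["10.244.1.5", "10.244.2.7"])
rng = random.Random(42)
print(proxy.route("10.96.0.20", rng))  # one of the two pod IPs
# The pod at 10.244.1.5 dies: its endpoint is removed and the rules are re-synced.
proxy.sync("10.96.0.20", ["10.244.2.7"])
print(proxy.route("10.96.0.20", rng))  # 10.244.2.7
```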

Container Runtime: The Engine Behind Containers

The container runtime is the software that runs your containers. Kubernetes supports multiple runtimes via the Container Runtime Interface (CRI).

Common Container Runtimes

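Docker Engine – The best-known runtime. Kubernetes removed its built-in Docker support (dockershim) in version 1.24, so Docker Engine now needs the cri-dockerd adapter. Images built with Docker still run on any CRI runtime.

containerd – A lean runtime and the default in most Kubernetes distributions and managed services.

CRI-O – A lightweight runtime designed specifically for Kubernetes.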

How it works on a Worker Node

1. Kubelet instructs the container runtime to pull an image.

2. The runtime downloads and stores the container image.

3. It then runs the container inside the pod.

4. Kubelet monitors the container's health and restarts it if needed.


kubectl: Your Command-Line Gateway to Kubernetes

kubectl is the command-line tool that lets you interact with your Kubernetes cluster. It communicates with the API server to manage cluster resources.

Common kubectl Commands

✅ kubectl get pods – Lists the pods in the current namespace (add -A to list pods in all namespaces).

✅ kubectl describe pod <pod-name> – Shows detailed info about a pod.

✅ kubectl apply -f <file>.yaml – Deploys resources from a YAML file.

✅ kubectl delete pod <pod-name> – Deletes a specific pod.

How It Works

1️⃣ You run a kubectl command.

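2️⃣ kubectl sends the request as a REST call to the Kubernetes API Server defined in your kubeconfig file (by default ~/.kube/config).

3️⃣ The API Server validates the request and persists the change in etcd. The relevant controllers notice the change through their watches and act on it.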
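Every kubectl command becomes an HTTP request against a REST path on the API Server. Core resources such as pods live under /api/v1. Resources in named API groups, such as Deployments in the apps group, live under /apis/<group>/<version>. The Python sketch below builds those paths from a small, hypothetical resource table. You can compare its output with the URLs kubectl logs when run at higher verbosity (for example, kubectl get pods -v=6).

```python
from typing import Optional

# A few namespaced resources and their API group/version ("" = core group).
RESOURCE_GROUPS = {
    "pods": ("", "v1"),
    "services": ("", "v1"),
    "deployments": ("apps", "v1"),
}


def rest_path(resource: str, namespace: str = "default", name: Optional[str] = None) -> str:
    """Build the REST path targeted for a namespaced resource (toy helper)."""
    group, version = RESOURCE_GROUPS[resource]
    # Core-group resources live under /api/<version>; others under /apis/<group>/<version>.
    prefix = f"/api/{version}" if group == "" else f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    return f"{path}/{name}" if name else path


print(rest_path("pods"))                     # /api/v1/namespaces/default/pods
print(rest_path("deployments", name="web"))  # /apis/apps/v1/namespaces/default/deployments/web
```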

Networking & External Access

- CNI: Container Network Interface - The Network Plugin System

Kubernetes itself does not implement pod networking. Instead, it relies on CNI (Container Network Interface) plugins to manage pod-to-pod communication.

Popular CNI Plugins

Flannel – Simple overlay networking.

Calico – Provides networking + network security policies.

Cilium – Uses eBPF for high-performance networking.

Weave Net – Simple, easy-to-use networking with optional encryption.

How CNI Functions

1️⃣ A pod is scheduled on a node.

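2️⃣ The container runtime calls the CNI plugin, which sets up the pod's network interface and assigns it an IP address.

3️⃣ The plugin configures routing so the pod can reach other pods, including those on other nodes.

4️⃣ If NetworkPolicies are defined and the plugin supports them (for example, Calico or Cilium), they are enforced.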
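Assigning the IP address is the job of an IPAM (IP address management) plugin. A common approach gives each node its own pod CIDR and hands out addresses from it. The CNI specification invokes plugins with ADD when a pod's network is set up and DEL when it is torn down. The toy Python allocator below illustrates that idea. The class name, the CIDR, and the choice to reserve the first address for a gateway are all assumptions.

```python
import ipaddress
from typing import Dict, List


class ToyHostLocalIPAM:
    """Toy per-node IP allocator: the node owns a pod CIDR and hands out addresses."""

    def __init__(self, pod_cidr: str) -> None:
        network = ipaddress.ip_network(pod_cidr)
        # Assumption: the first usable address is reserved (e.g. for a bridge/gateway).
        self._free: List[str] = [str(ip) for ip in network.hosts()][1:]
        self.allocated: Dict[str, str] = {}

    def add(self, pod: str) -> str:
        """Like a CNI ADD: give the pod the next free address (IndexError when exhausted)."""
        ip = self._free.pop(0)
        self.allocated[pod] = ip
        return ip

    def delete(self, pod: str) -> None:
        """Like a CNI DEL: return the pod's address to the pool."""
        self._free.append(self.allocated.pop(pod))


ipam = ToyHostLocalIPAM("10.244.1.0/24")
print(ipam.add("web-1"))  # 10.244.1.2
print(ipam.add("web-2"))  # 10.244.1.3
ipam.delete("web-1")
print(ipam.allocated)     # {'web-2': '10.244.1.3'}
```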

- Cloud Controller Manager: The Cloud Provider Bridge

If you’re running Kubernetes on a cloud provider (AWS, GCP, Azure), the Cloud Controller Manager (CCM) is responsible for integrating with cloud-specific services.

What Does the CCM Do?

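Manages Load Balancers – Automatically provisions cloud load balancers for Services of type LoadBalancer.

Handles Cloud Authentication – Uses the cloud provider's credentials (for example, IAM roles) to call cloud APIs on the cluster's behalf.

Manages Cloud Nodes – Ensures Kubernetes nodes match cloud VM instances, labeling them with cloud metadata and removing nodes whose VMs are deleted.

Manages Persistent Storage – Works alongside the provider's storage integration (today typically a CSI driver) to dynamically provision cloud-based volumes.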

How It Works

1️⃣ You create a Service of type LoadBalancer.

2️⃣ The Cloud Controller Manager talks to the cloud API to create a cloud load balancer.

3️⃣ It writes the assigned external IP (or hostname) back to the Service's status in Kubernetes.
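These steps follow the same reconcile pattern as the other controllers. In this Python sketch, FakeCloud and its create_load_balancer method are hypothetical stand-ins for a provider SDK. The ingress_ip attribute stands in for the Service's status.loadBalancer.ingress field. The IPs come from the 203.0.113.0/24 documentation range.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Service:
    name: str
    type: str
    ingress_ip: Optional[str] = None  # stands in for status.loadBalancer.ingress


class FakeCloud:
    """Hypothetical cloud API; a real CCM calls the provider's SDK instead."""

    def __init__(self) -> None:
        self._ips = (f"203.0.113.{i}" for i in itertools.count(10))
        self.load_balancers: Dict[str, str] = {}

    def create_load_balancer(self, service_name: str) -> str:
        ip = next(self._ips)
        self.load_balancers[service_name] = ip
        return ip


def reconcile_services(services: List[Service], cloud: FakeCloud) -> None:
    """One pass of a toy service controller, as it might run inside the CCM."""
    for svc in services:
        if svc.type == "LoadBalancer" and svc.ingress_ip is None:
            # Provision a cloud load balancer and write its IP back to the Service.
            svc.ingress_ip = cloud.create_load_balancer(svc.name)


services = [Service("web", "LoadBalancer"), Service("db", "ClusterIP")]
cloud = FakeCloud()
reconcile_services(services, cloud)
print(services[0].ingress_ip)  # 203.0.113.10
print(services[1].ingress_ip)  # None
```

Running the reconcile pass again does not create a second load balancer, because the Service already has an IP recorded.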

Summary:

Kubernetes is a highly scalable container orchestration platform that automates the deployment, scaling, and management of containerized applications. A cluster is built from control plane (master) nodes and worker nodes, an architecture designed for high availability and efficiency. On the control plane, etcd, the API Server, the Controller Manager, and the Scheduler keep the system in its desired state. On each worker node, the kubelet, kube-proxy, and the container runtime run the workloads. Understanding these basics is essential for smooth application management in cloud environments.